Apple’s Vision Pro Has Avatar Webcam

Technology giant Apple has introduced a new headset called the “Vision Pro,” which is expected to revolutionize the way we think about virtual and augmented reality. In addition to offering immersive experiences, Apple says that Vision Pro will be able to run most iPad and iOS apps out of the box, with no changes. For video chat apps like Zoom, Messenger, Discord, and others, the company says that an ‘avatar webcam’ will be supplied to apps so that they can automatically handle video calls between the headset and other devices.

Overview

The article covers the following topics:

- Introduction to Apple’s Vision Pro headset

- How Vision Pro can run most iPad and iOS apps out of the box

- How the ‘avatar webcam’ feature works for video chat apps

- Availability of apps on the headset’s App Store

- Capabilities of the headset’s ‘Persona’ feature

- Limits on apps’ use of the headset’s front cameras

- Possibilities of incorporating AR face filters in apps

- Inputs from the headset that developers can expect

- The need for existing ARKit apps to be updated for Vision Pro

- The reviewer’s experiences with the Vision Pro headset

- The headset’s design, fit, and lenses

- Interface and app options

- Eye tracking as a major part of the interface

- Compatibility of keyboards and trackpads

- Plans for Vision Pro Developer Labs

Capabilities of the Avatar Webcam Feature for Video Chat Apps

Apple’s Vision Pro headset will ship with an “avatar webcam” feature that lets video chat apps like Zoom, Messenger, Discord, and Google Meet handle video calls between the headset and other devices without any changes to how the app handles camera input. Instead of a live camera view, Vision Pro supplies a view of the user’s computer-generated avatar, which Apple calls a Persona. Developers can also expect up to two inputs from the headset, so apps that rely on two-finger gestures (like pinch-to-zoom) should work just fine, but gestures using three or more fingers won’t be possible.

Availability of Apps on the Headset’s App Store

According to Apple, all suitable iOS and iPadOS apps will be available on the headset’s App Store on day one. Most apps don’t need any changes at all, and the majority should run on the headset right out of the box. Developers will be able to opt out of having their apps on the headset if they’d like. Unfortunately, existing ARKit apps won’t work out of the box on Apple Vision Pro; developers will need to update them to the upgraded version of ARKit and other tools to make them ready for the headset.

Possibilities of Incorporating AR Face Filters in Apps

In addition to providing a view of the user’s computer-generated avatar, there’s potential for ‘AR face filters’ in apps like Snapchat and Messenger that will work correctly with the user’s Apple Vision Pro avatar, with the app none the wiser that it’s looking at a computer-generated avatar rather than a real person. The headset’s front cameras scan the user’s face to create a model, which is then animated according to the head, eye, and hand movements the headset tracks.

Eye Tracking as a Major Part of the Interface

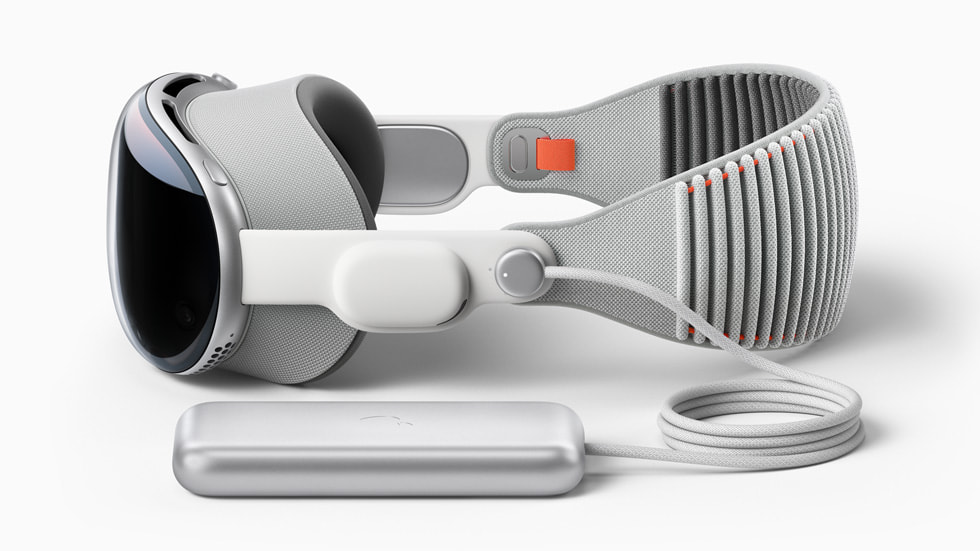

Apple’s headset relies on eye tracking constantly throughout its interface, which could be one reason the headset uses an external battery pack. In the reviewer’s hands-on time, eye tracking as a major part of the interface felt transformative, in the way VR interfaces had been expected to feel years ago. It remains to be seen how it will hold up in longer sessions, but its ease of use suggests it could make for a great user experience.

Conclusion

Apple’s Vision Pro is an innovative headset that offers immersive experiences and can run most iPad and iOS apps. Its avatar webcam feature for video chat apps is a game-changer, and developers can expect up to two inputs from the headset. With front cameras that scan the user’s face to create a model and an interface built around constant eye tracking, Vision Pro offers a unique and transformative experience.

FAQ

Q1. Will developers be able to test their apps before the release of Vision Pro to the public?

A. Yes. Apple plans to open ‘Apple Vision Pro Developer Labs’ in a handful of locations around the world starting this summer. Developers will also be able to submit a request to have their apps tested on Vision Pro, with Apple carrying out the testing and providing feedback remotely.

Q2. Will developers need new tools to build for the headset?

A. Yes. Apple will release a visionOS SDK, along with updated versions of Reality Composer and Xcode, by the end of June to support development for the headset. New Human Interface Guidelines will also be available to help developers follow best practices for spatial apps on Vision Pro.

Q3. What are the headset’s design and fit like?

A. The compact headset reminds the reviewer of an Apple-designed Meta Quest Pro, with a comfy and stretchy back strap, a dial to adjust the rear fit, and a top strap for stability.

Q4. What lenses does the headset use?

A. The headset doesn’t accommodate glasses; instead, it relies on custom Zeiss inserts to correct wearers’ vision. Through a setup process, Apple found inserts that matched the reviewer’s vision well enough that everything looked crystal clear, which is no small feat.

Q5. Will the Vision Pro headset work with keyboards and trackpads?

A. Yes. It will work with Apple’s Magic Keyboard and Magic Trackpad, as well as with Macs, but not with iPhone, iPad, or Apple Watch touchscreens, at least for now.