The Rise of Social Media and the Threat of Online Censorship

Social Media and the Democratization of Information

At Morningpicker, we recognize the importance of social media in facilitating online discussion and debate. However, we also acknowledge the need for regulation to protect users from harm. The UK's Online Safety Bill aims to hold social media companies accountable for the content they host, with fines of up to 10% of turnover for non-compliance. While this may seem like a reasonable approach, critics worry that it could lead to over-censorship, with automated systems removing lawful posts and stifling public discourse and free speech.

The Impact of the Online Safety Bill on Social Media Platforms

The Online Safety Bill has significant implications for social media platforms, which will be required to tackle problematic content proactively or risk fines. That obligation raises concerns about over-censorship and its effect on public discourse. At Morningpicker, we believe platforms must navigate these complexities carefully, striking the right balance between regulation and user freedom.

According to a recent report, the Online Safety Bill could have serious implications for public discourse in the UK, particularly for refugee charities and humanitarian organizations that rely on social media to raise awareness. If lawful posts are removed, public debate could be stifled and these organizations could lose an important channel for drawing attention to the issues they work on.

The Consequences of Over-Censorship and the Need for Balance

The consequences of over-censorship are far-reaching for public discourse and free speech. Avoiding them requires a nuanced approach, one that accounts for the complexities of online discourse while still protecting users from harm.

According to Dr. Monica Horten, a policy manager at Open Rights Group, the Online Safety Bill could force social media companies into overzealous policing of their platforms. “The chances are they would rely on artificial intelligence techniques, or content moderation systems based on perceptual hashing, which effectively compares posts taken from social media feeds against a database of images,” she said. “Both options entail risks of over-blocking. Lawful posts could be censored, with serious implications for public discourse in the UK.”
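To illustrate the over-blocking risk Dr. Horten describes, here is a minimal sketch of one simple perceptual hashing scheme, an "average hash", written in Python. It is an illustration under stated assumptions, not a description of how any particular platform works: production systems such as Microsoft's PhotoDNA or Meta's PDQ are considerably more sophisticated, and the 8x8 grayscale images, the one-entry database and the match thresholds below are all hypothetical.

```python
from typing import List

# Hypothetical representation: a grayscale image already downscaled to 8x8,
# stored as rows of pixel brightness values from 0 (black) to 255 (white).
Image = List[List[int]]


def average_hash(pixels: Image) -> int:
    """Average hash (aHash): one bit per pixel, set when it is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_blocked(post: Image, database: List[int], threshold: int) -> bool:
    """Flag a post if its hash is within `threshold` bits of any hash in the database."""
    h = average_hash(post)
    return any(hamming(h, known) <= threshold for known in database)


# Hypothetical test images.
def left_right_split() -> Image:
    """Dark left half, bright right half."""
    return [[20] * 4 + [200] * 4 for _ in range(8)]


def top_bottom_split() -> Image:
    """Dark top half, bright bottom half: a visually different image."""
    return [[20] * 8 for _ in range(4)] + [[200] * 8 for _ in range(4)]


def with_caption_strip(img: Image) -> Image:
    """Copy of `img` with a bright caption band over the bottom row."""
    copy = [row[:] for row in img]
    copy[-1] = [255] * len(copy[-1])
    return copy


if __name__ == "__main__":
    # Hypothetical database containing the hash of one flagged image.
    database = [average_hash(left_right_split())]

    # A captioned copy differs by only 4 of 64 bits, so it still matches.
    print(is_blocked(with_caption_strip(left_right_split()), database, threshold=5))  # True
    # A different image differs by 32 bits and passes a strict threshold...
    print(is_blocked(top_bottom_split(), database, threshold=5))  # False
    # ...but is blocked once the threshold is loosened.
    print(is_blocked(top_bottom_split(), database, threshold=32))  # True
```

Because the hash compares only pixel patterns, the captioned copy matches no matter why it was posted; a news report or charity appeal reusing a flagged image would be caught in exactly the same way. Loosening the threshold, as a platform anxious to avoid fines might, starts catching images that are not copies at all.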

The Dark Side of Social Media and the Rise of Extremism

The Spread of Extremist Content on Social Media

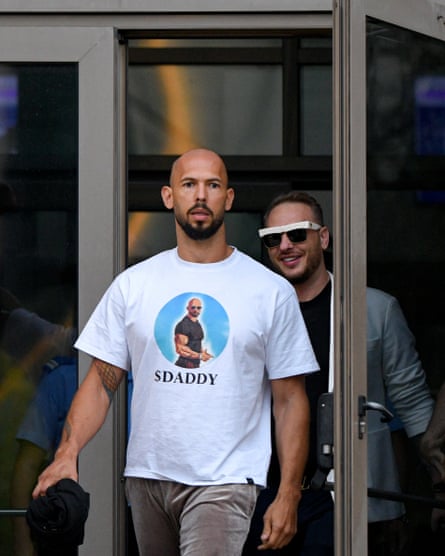

Social media platforms have been criticized for allowing extremist content to spread, with some users racking up millions of followers and generating significant revenue. Algorithms that prioritize engagement and revenue over safety have created an environment where toxic content can thrive.

At Morningpicker, we recognize the risks that extremist content on social media poses and the case for regulation to protect users from harm. Any such regulation, however, must account for the complexities of online discourse and balance safety against user freedom.

The Role of Algorithms in Amplifying Extremist Voices

Algorithms that social media platforms use to rank content can inadvertently amplify extremist voices, creating echo chambers that fuel further polarization. Greater transparency and accountability in algorithmic decision-making are crucial to preventing the spread of extremist content.

According to Ed Saperia, dean of the London College of Political Technology, “controversial content drives engagement. Extreme content drives engagement.” This creates a toxic environment where users are incentivized to create content that is likely to generate engagement, rather than content that is accurate or informative.

The Impact of Social Media on Public Safety and Community Cohesion

The spread of extremist content on social media has real-world consequences, including incitement to violence and heightened racial tensions. Social media platforms must take responsibility for addressing these issues and for promoting public safety and community cohesion.

The TikTok Ban and Online Migration

The TikTok Ban and the US-China Tech War

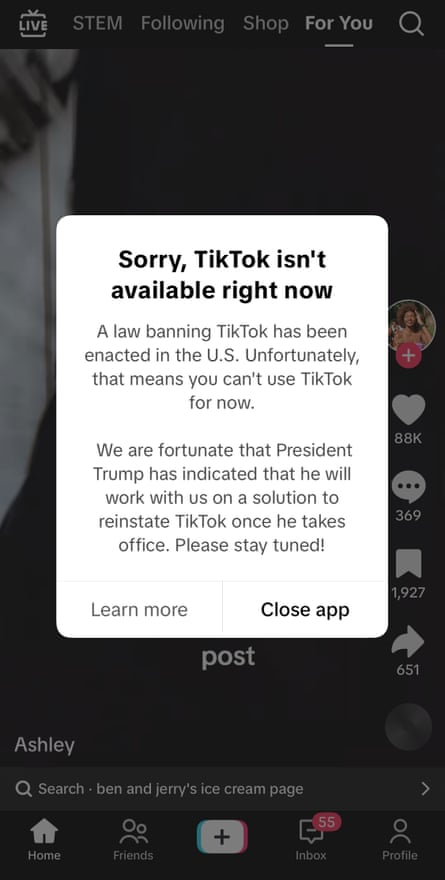

The ban on TikTok in the US is part of a broader tech war between the US and China, with implications for global internet freedom and regulation. The move reflects growing concerns about the spread of Chinese influence and the potential security risks posed by foreign-owned technology companies.

At Morningpicker, we recognize the complexities of the US-China tech war and what it means for global internet freedom and regulation. We also acknowledge the potential security risks posed by foreign-owned technology companies and the need for regulation to protect users from harm.

The Potential Consequences of a Global TikTok Ban

A global ban on TikTok could have significant consequences for users, including the loss of a popular platform for self-expression and creativity. However, such a ban could also serve as a model for regulating other foreign-owned tech companies and promoting online safety and security.

According to a recent report, TikTok’s ban in the US has been five years in the making, with various members of Congress proposing measures to restrict or ban the app. The Protecting Americans from Foreign Adversary Controlled Applications Act eventually became law, requiring that TikTok be sold or face a ban.

The Need for a Balanced Approach to Regulation and Free Speech

The TikTok ban highlights the need for a balanced approach to regulation and free speech, one that protects users from harm while preserving online freedom and creativity.

At Morningpicker, we believe that social media platforms must take responsibility for addressing these issues and for promoting online safety and security, striking the right balance between regulation and user freedom.

Conclusion